Polls apart: What the numbers are telling us, and why so many get it so wrong

Week three of the campaign brings new polls showing a persistent lead for Labor, despite commentary suggesting Anthony Albanese is in trouble. Dennis Atkins explains how the polls are working in 2022.

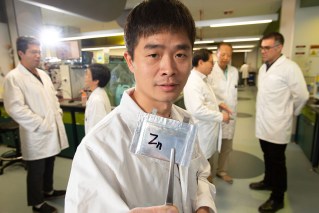

The failure of opinion polls to predict the shock result of the last federal election has raised doubts about their methodology. (Photo: ABC)

At the start of the third – critical – week of this campaign, it’s worth taking a moment to give opinion polls some consideration.

New data from Ipsos in The Australian Financial Review and Newspoll in The Australian say the Labor Party’s six- to 10-point lead is holding steady, putting Anthony Albanese on a supposed path to victory.

Is this more of the same or can the polls in 2022 be trusted?

Three years ago, the polls copped the blame when everyone woke up surprised on May 19, 2019, the morning after the election, to find Scott Morrison and the Coalition re-elected.

Australia’s leading online bookies had declared Bill Shorten and Labor the winner, paying out all ALP bets days before the first votes were counted. Pundits told everyone to put down their political binoculars and an exit poll at 6pm on Election Day said Morrison had lost.

Within 48 hours of all that happening, everyone had sheeted home the blame to the pollsters and declared we should never take any notice of them again.

Now, three years on, we are back at it, fixating on the polls with the whispered caveat that the pollsters got it wrong in 2019 but have since mended their broken systems and made themselves more reliable.

In unpacking what occurred in 2019, it’s worth making a couple of things clear.

The polls were not as wrong as everyone thinks they were three years ago. It’s true all of the published polls predicted a Labor victory, which on the surface looks like a massive failure.

However, the miss in almost all cases was within what’s called the margin of error. This measures how far a poll’s figures could stray from the true level of support simply because only a sample of voters was surveyed. Pollsters usually quote it at a 95 per cent confidence level, which means about one survey in 20 will be further out than the stated margin by chance alone.

A sample of 1200 to 1400 respondents, which is what most of the published polls use, gives a margin of error of roughly 2 to 3 percentage points. A bigger sample narrows that range, but it does not change the odds: about one survey in 20 is still likely to be an outlier and mistaken.
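For readers who want to check the arithmetic, here is a minimal sketch of the textbook margin-of-error formula for a simple random sample, assuming the usual 95 per cent confidence level (z = 1.96) and the worst case of a 50/50 split (p = 0.5). Real polls are weighted, which generally makes the true margin somewhat wider than this formula suggests, so treat the output as a rough guide rather than any pollster’s published method.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error in percentage points for a simple random sample of size n.

    p = 0.5 is the worst case (widest margin); z = 1.96 corresponds to
    95 per cent confidence. Weighting and design effects are ignored.
    """
    return z * math.sqrt(p * (1 - p) / n) * 100


# Sample sizes typical of the published national polls.
moe_1200 = margin_of_error(1200)
moe_1400 = margin_of_error(1400)

for n, moe in ((1200, moe_1200), (1400, moe_1400)):
    print(f"n = {n}: +/- {moe:.1f} points")
```

Because the sample size sits under a square root, quadrupling the sample only halves the margin of error, which is one reason pollsters rarely go far beyond a few thousand respondents.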

Pollsters also point out that, given the technical (very nerdy) nature of the work, the margin of error itself has a margin of error; eventually all this disappears into the ninth moon of Uranus.

With a top-line margin of error (MoE) of three points, the surveys right up to Election Day in 2019 were close enough but not perfect, especially in calculating the two-party preferred vote.

The greater errors in 2019 were in the primary vote which, in the case of Labor in particular, was overstated. Most of this was still within the accepted MoE for most surveys but, as we saw, contributed to a general misconception about who had the better chance of winning.

Another point is that just about all of the published polls in 2019 were national surveys, which by definition do not measure what’s happening in individual seats.

The major parties conduct tracking polls which test opinion in a basket of seats, usually 20 or so, selected for their “must win/must defend” status and for how well they represent the broader landscape.

Three years ago, senior figures from the Labor and Coalition campaigns were looking at similar views of where the votes were and how they were moving. They knew there was a swing to the Coalition in the last 10 to 14 days, that it was happening in places essential for a Liberal/Nationals victory, and that Labor had some structural problems that were inescapable.

Bill Shorten had gone from being a concern to a figure of fear for voters, and Labor’s policy mix was too big and too ill-defined to clear the hurdles of clarity and calm.

Pollsters have since fine-tuned how they sample opinion. They now give more weight to educational qualifications after a review concluded too many of the surveys were missing voters aged 24 to 39 who hadn’t undertaken tertiary studies.
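Pollsters don’t spell out their exact weighting schemes, so what follows is only a minimal sketch of one common technique, post-stratification, applied to a single education variable. Each group’s responses are scaled by its share of the population divided by its share of the sample. The group names, population shares and support levels below are made up for illustration and are not real polling data. In practice pollsters typically weight on several variables at once (age, gender, location, past vote and so on).

```python
# Hypothetical figures for illustration only; not real polling data.
sample_counts = {"degree": 600, "no_degree": 400}        # respondents in each group
population_share = {"degree": 0.3, "no_degree": 0.7}     # assumed share of all voters
support = {"degree": 0.55, "no_degree": 0.45}            # share backing a given party


def post_stratification_weights(counts, targets):
    """Weight for each group = population share / sample share."""
    total = sum(counts.values())
    return {g: targets[g] / (counts[g] / total) for g in counts}


def weighted_support(counts, weights, support):
    """Overall support after each respondent is scaled by their group's weight."""
    weighted_n = {g: counts[g] * weights[g] for g in counts}
    total = sum(weighted_n.values())
    return sum(weighted_n[g] * support[g] for g in counts) / total


weights = post_stratification_weights(sample_counts, population_share)
raw = sum(sample_counts[g] * support[g] for g in sample_counts) / sum(sample_counts.values())
adjusted = weighted_support(sample_counts, weights, support)

for group, w in weights.items():
    print(f"{group}: weight {w:.2f}")
print(f"unweighted support: {raw:.1%}, weighted support: {adjusted:.1%}")
```

In this made-up example, graduates are over-represented in the raw sample, so weighting pulls the headline figure down from 51 to 48 per cent: a shift as large as a typical margin of error.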

The way the surveys themselves are conducted has also been sharpened over the last three years, with the major pollsters ditching the dodgy practice of using “robo-polls”, which are carried out by automated phone banks asking respondents to press numbers to express preferences.

Pollsters now use a mix of direct phone surveying and established online panels – these can be either entirely random or people placed on regularly sampled lists.

This side of opinion polling has itself become a sub-industry. Panel brokers buy lists of people, weighted demographically and geographically, who can be contacted and then sell them to the pollsters.

This has happened because it is getting harder and harder to get a suitable sample. At the last state election in Queensland, a poll for the metropolitan electorate of South Brisbane sought the views of 500 people found by making more than 6000 calls. That’s a hang-up rate of more than nine in every 10 attempts.
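The arithmetic behind that figure is simple. This quick check uses the numbers quoted above and treats every call that didn’t produce a completed interview as a failure, which is a simplification: some calls go unanswered rather than being hung up on.

```python
completed = 500   # interviews completed (figure from the South Brisbane example)
calls = 6000      # calls made ("more than 6000", so this is a lower bound)

completion_rate = completed / calls
failure_rate = 1 - completion_rate

print(f"completed: {completion_rate:.1%}, did not complete: {failure_rate:.1%}")
```

With more than 6000 calls, the real failure rate is at least the roughly 92 per cent shown here.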

The business of getting these voter panels together is also becoming more and more specialised. There are only a handful of people who assemble and broker the panels – for example, in Queensland all stand-alone surveys for pollsters are purchased from one company.

People who sign up for panels are usually paid (not much), and some pollsters establish their own in-house operations. The biggest pollster operating in Australia, YouGov, which conducts Newspoll and is the main survey outfit for the Labor Party, signs people up; they then accumulate points which can be converted into small amounts ($50 or $100) or used for charity donations.

Take all this into consideration when looking at published polls in coming weeks. The good polls will have at least four essential pieces of information: the sample size, the dates the surveys were conducted, the margin of error, and the method used for sampling (direct phone contact, online panels or a mix).

The best pollsters for explaining their methodology are Ipsos and YouGov (both big international companies with deep pockets and treasured brands). YouGov, which worked hard to improve transparency in Australia after the 2019 election, provides the most detail.

The Nine newspapers’ pollster, Resolve, has a handy Q&A with its tables, but some aspects of its methodology are a little unclear.

Overall, if a poll is missing even one of these measures of quality, ignore it. It is almost certainly less reliable. If it is missing all of them, turn to the comics and get a reality check.